Llama 3.1 Chat Template

Changes to the prompt format, such as EOS tokens and the chat template, have been incorporated into the provided tokenizer configuration. The included tokenizer will correctly format … This model uses the following chat template and does not support a separate system prompt: …
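To show roughly what the tokenizer's chat template produces, here is a minimal sketch that renders a list of messages into the Llama 3 family's special-token layout by hand. It assumes the standard header tokens (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) and the stop tokens `<|eot_id|>`, `<|eom_id|>` and `<|end_of_text|>`. The official Llama 3.1 template also injects extra system text (such as knowledge-cutoff and date lines) and tool definitions, which this sketch omits, so real code should prefer `tokenizer.apply_chat_template(...)` from Hugging Face `transformers`. The helper names `render_llama31_prompt` and `truncate_at_stop` are hypothetical.

```python
# Hypothetical helpers sketching the Llama 3.x special-token prompt layout.
# The official Llama 3.1 template adds more (date lines, tool definitions);
# in real code, use the tokenizer's own chat template instead.

BOS = "<|begin_of_text|>"
# Tokens that end a turn, a tool-expecting message, or the whole sequence.
STOP_TOKENS = ("<|eot_id|>", "<|eom_id|>", "<|end_of_text|>")


def render_llama31_prompt(messages, add_generation_prompt=True):
    """Render [{"role": ..., "content": ...}, ...] into one prompt string."""
    parts = [BOS]
    for msg in messages:
        # Each turn: role header, blank line, trimmed content, end-of-turn token.
        parts.append(
            f"<|start_header_id|>{msg['role']}<|end_header_id|>\n\n"
            f"{msg['content'].strip()}<|eot_id|>"
        )
    if add_generation_prompt:
        # Open an assistant header so the model writes the next turn.
        parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def truncate_at_stop(generated):
    """Cut decoded model output at the earliest stop token, if any."""
    cut = len(generated)
    for tok in STOP_TOKENS:
        idx = generated.find(tok)
        if idx != -1:
            cut = min(cut, idx)
    return generated[:cut]


if __name__ == "__main__":
    print(render_llama31_prompt([{"role": "user", "content": "Hello!"}]))
    print(truncate_at_stop("Hi there.<|eot_id|>ignored"))
```

Treating several tokens as end-of-generation mirrors why the EOS configuration ships with the tokenizer: stopping on `<|end_of_text|>` alone would let the model run past the end of its turn.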

Llama 3.1 JSON tool-calling chat template

Only reply with a tool call if the function exists in the library provided by the user. If it doesn't exist, just reply directly in natural language. When you receive a tool call response, use the …
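The same rule can also be enforced on the client side. The sketch below assumes the model emits a JSON object of the form `{"name": ..., "parameters": {...}}` (the shape commonly used for Llama 3.1 JSON tool calling; some templates use `"arguments"` instead). It looks the name up in a user-provided dictionary of Python functions and treats anything else as a plain natural-language reply. A successful call is wrapped as a tool-response message using the `ipython` role, which Llama 3.1 uses for tool output; other templates may expect `tool`. The `get_weather` tool and the `handle_model_reply` helper are hypothetical.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would query a weather service."""
    return f"Sunny in {city}"


# The "library" of functions the user makes available to the model.
TOOLS = {"get_weather": get_weather}


def handle_model_reply(reply: str, tools: dict):
    """Return ("tool", message) for a valid tool call, else ("text", reply).

    A reply counts as a tool call only if it parses as a JSON object whose
    "name" exists in `tools`; anything else is treated as natural language.
    """
    try:
        call = json.loads(reply)
    except json.JSONDecodeError:
        return "text", reply
    name = call.get("name") if isinstance(call, dict) else None
    if not isinstance(name, str) or name not in tools:
        return "text", reply
    params = call.get("parameters") or {}
    if not isinstance(params, dict):
        return "text", reply
    result = tools[name](**params)
    # Tool output goes back to the model as its own message.
    return "tool", {"role": "ipython", "content": str(result)}
```

For example, `handle_model_reply('{"name": "get_weather", "parameters": {"city": "Paris"}}', TOOLS)` returns a tool message whose content is `"Sunny in Paris"`, while a call to a function missing from `TOOLS` falls back to `("text", ...)`. Appending the tool message to the conversation and generating again gives the model the tool response for its next turn.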

If it doesn't exist, just reply directly in natural language. When you receive a tool call response, use the. Llama 3.1 json tool calling chat template. Only reply with a tool call if the function exists in the library provided by the user. Instantly share code, notes, and snippets. This model uses the following chat template and does not support a separate system prompt: Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. The included tokenizer will correctly format.

Llama AI Llama 3.1 Prompts

Instantly share code, notes, and snippets. This model uses the following chat template and does not support a separate system prompt: If it doesn't exist, just reply directly in natural language. When you receive a tool call response, use the. Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which.

antareepdey/Medical_chat_Llamachattemplate · Datasets at Hugging Face

If it doesn't exist, just reply directly in natural language. When you receive a tool call response, use the. Instantly share code, notes, and snippets. Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. Only reply with a tool call if the function exists in the library.

wangrice/ft_llama_chat_template · Hugging Face

The included tokenizer will correctly format. Only reply with a tool call if the function exists in the library provided by the user. This model uses the following chat template and does not support a separate system prompt: Llama 3.1 json tool calling chat template. If it doesn't exist, just reply directly in natural language.

Building a Chat Application with Ollama's Llama 3 Model Using

This model uses the following chat template and does not support a separate system prompt: Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. Only reply with a tool call if the function exists in the library provided by the user. The included tokenizer will correctly format..

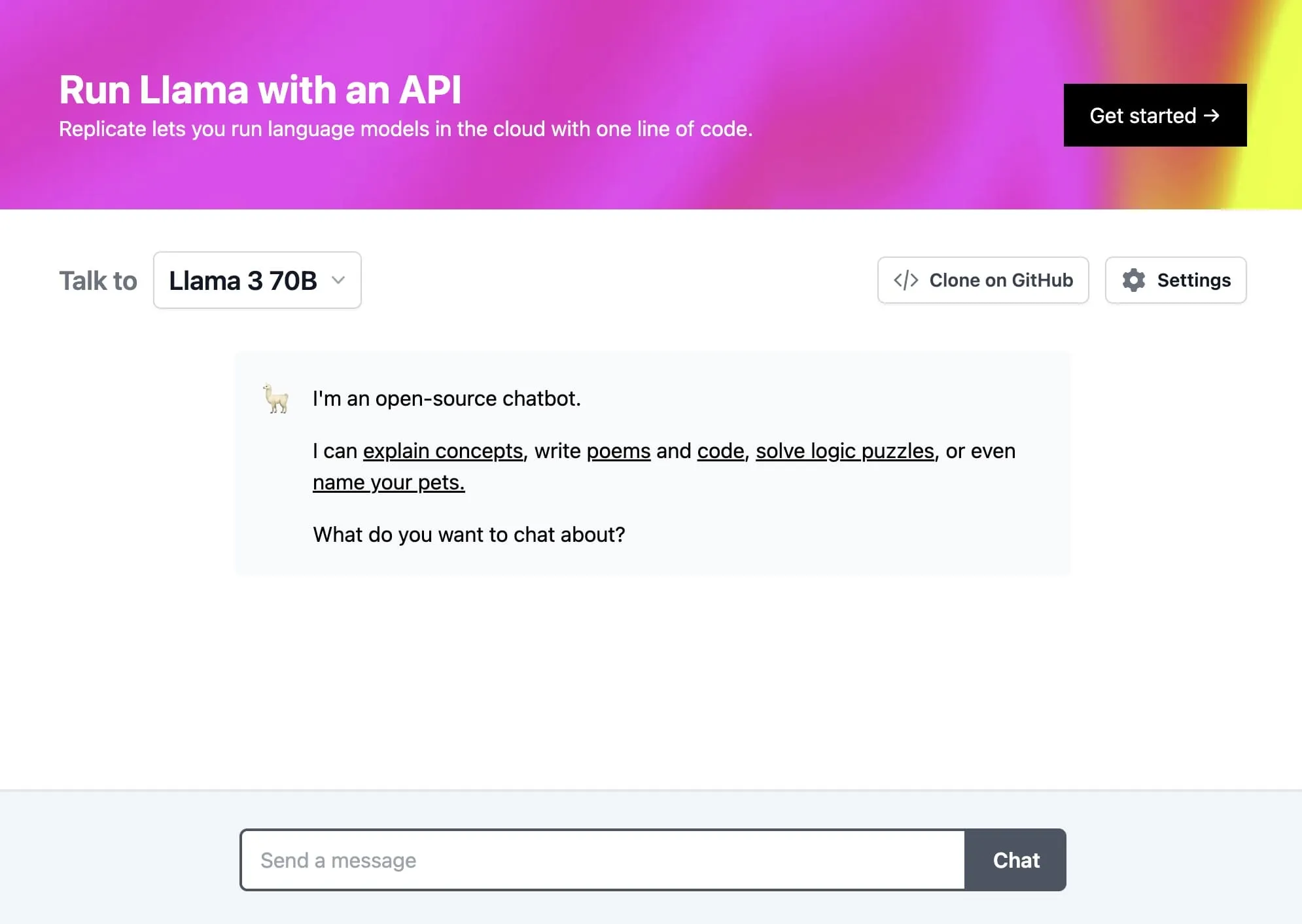

Run Meta Llama 3.1 405B with an API Replicate blog

Llama 3.1 json tool calling chat template. If it doesn't exist, just reply directly in natural language. Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. This model uses the following chat template and does not support a separate system prompt: When you receive a tool call.

Chat with Meta Llama 3.1 on Replicate

Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. This model uses the following chat template and does not support a separate system prompt: The included tokenizer will correctly format. Instantly share code, notes, and snippets. When you receive a tool call response, use the.

Chat With Llama 3.1 Using Whisper a Hugging Face Space by candenizkocak

The included tokenizer will correctly format. This model uses the following chat template and does not support a separate system prompt: When you receive a tool call response, use the. Llama 3.1 json tool calling chat template. Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided.

Online Llama 3.1 405B Chat by Meta AI Reviews, Features, Pricing

Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. The included tokenizer will correctly format. When you receive a tool call response, use the. This model uses the following chat template and does not support a separate system prompt: If it doesn't exist, just reply directly in.

llama3.1405b

Llama 3.1 json tool calling chat template. If it doesn't exist, just reply directly in natural language. Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided. The included tokenizer will correctly format. Only reply with a tool call if the function exists in the library provided by.

Creating a RAG chatbot with Llama 3.1 can significantly enhance your

This model uses the following chat template and does not support a separate system prompt: The included tokenizer will correctly format. Only reply with a tool call if the function exists in the library provided by the user. Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided..

When You Receive A Tool Call Response, Use The.

If it doesn't exist, just reply directly in natural language. Llama 3.1 json tool calling chat template. The included tokenizer will correctly format. Instantly share code, notes, and snippets.

Only Reply With A Tool Call If The Function Exists In The Library Provided By The User.

This model uses the following chat template and does not support a separate system prompt: Changes to the prompt format —such as eos tokens and the chat template—have been incorporated into the tokenizer configuration which is provided.